Drupal behind Amazon CloudFront

CloudFront is a global cache for websites. We use it with the Drupal CMS, and in this post we share our experiences: how to set it up, how to debug it, its strengths and weaknesses, and some common pitfalls.

When your website is intended to reach anonymous visitors globally, it's clear that it is worth using something like CloudFront. CloudFront is a CDN (content delivery network), and it's fair to describe it as a global reverse-proxy infrastructure offered as a service by Amazon Web Services. Even a small blog can benefit from a global CDN. For a corporate site that usually receives little traffic but occasionally, e.g. during a marketing campaign, gets a sudden surge of visitors, a CDN is a good solution. And for a news portal some kind of reverse-proxy caching is typically a must.

The clear benefits:

- Low latency - content is served from the provider's nearest datacenter.

- No practical bandwidth limit - it scales to handle even the largest unexpected traffic peaks.

- DDoS attack mitigation.

- Lower resource requirements for your servers.

Serve the world with the smallest server

I do like the idea of static site generators like Jekyll. They are simple and fast out of the box. But when you need the flexibility or features that a CMS like Drupal 8 offers, you can still achieve the same or even better performance by adding a reverse proxy.

The idea of a good reverse-proxy setup is that content stays in the cache with an extremely long max age (a year or more), while a properly working invalidation mechanism takes care of content changes, so anonymous requests normally never even reach your server.
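A quick way to confirm the long max age is to look at the Cache-Control header your Drupal origin sends for anonymous pages; in Drupal 8 the value comes from the page cache maximum age setting (`system.performance` config, key `cache.page.max_age`). The following is a minimal sketch, assuming you already have the header string (for example from `curl -I`). The helper names are hypothetical, and the one-year threshold is simply the figure mentioned above.

```python
from typing import Dict, Optional

# Assumption: the one-year target from the text; adjust to your own policy.
ONE_YEAR_SECONDS = 365 * 24 * 60 * 60  # 31,536,000 seconds


def parse_cache_control(header: str) -> Dict[str, Optional[str]]:
    """Split a Cache-Control header into {directive: value or None}."""
    directives: Dict[str, Optional[str]] = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, value = part.partition("=")
        directives[name.strip().lower()] = value.strip().strip('"') if sep else None
    return directives


def shared_cache_ttl(header: str) -> Optional[int]:
    """TTL a shared cache such as a CDN would apply, or None if it must not store the response."""
    directives = parse_cache_control(header)
    if "no-store" in directives or "private" in directives:
        return None
    # For shared caches, s-maxage takes precedence over max-age.
    for key in ("s-maxage", "max-age"):
        value = directives.get(key)
        if value is not None and value.isdigit():
            return int(value)
    return None


def is_long_lived(header: str) -> bool:
    ttl = shared_cache_ttl(header)
    return ttl is not None and ttl >= ONE_YEAR_SECONDS


print(is_long_lived("max-age=31536000, public"))            # True
print(is_long_lived("must-revalidate, no-cache, private"))  # False
```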

Simply put your site behind a global CDN like CloudFront, and when it comes to serving content to anonymous visitors you are ready to compete with the largest sites in the world.

Just keep an eye on your cache hit rate, and think twice before you clear your caches, because that will empty your CDN too!
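CloudFront reports how each response was served in the `X-Cache` response header (for example `Hit from cloudfront`, `Miss from cloudfront`, `RefreshHit from cloudfront`), and the same result types appear in the `x-edge-result-type` field of its access logs. Here is a minimal sketch that computes a hit rate from such values. How you collect them (curl, a log export) is left open, and `cache_hit_rate` is a hypothetical helper. By default it counts only plain `Hit`, because a `RefreshHit` still made CloudFront contact your origin.

```python
from collections import Counter
from typing import Iterable


def cache_hit_rate(x_cache_values: Iterable[str], count_refresh_as_hit: bool = False) -> float:
    """Share of responses served from the edge, based on X-Cache header values
    such as "Hit from cloudfront" or "Miss from cloudfront"."""
    # The first word is the result type: Hit, RefreshHit, Miss, Error, ...
    counts = Counter(value.split()[0] for value in x_cache_values if value.strip())
    total = sum(counts.values())
    if total == 0:
        return 0.0
    hits = counts["Hit"]
    if count_refresh_as_hit:
        # RefreshHit: the object was cached but expired, so CloudFront revalidated it with the origin.
        hits += counts["RefreshHit"]
    return hits / total


sample = ["Hit from cloudfront"] * 3 + ["Miss from cloudfront"]
print(cache_hit_rate(sample))  # 0.75
```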

Anonymous traffic will NOT hit your server!

This is a good thing: it is what saves your server resources and lets you scale. But you need to keep it in mind when you build features or install modules that rely on the idea that every page request (even an anonymous one!) runs server-side code.

Examples of bad ideas from this perspective:

- The Drupal core Statistics module and the contributed Node view count module count views by sending AJAX POST requests, which bypass any reverse proxy. As a result, your server resources must grow with your traffic, so your easy scalability is gone. The Google Analytics Counter module is a good alternative for this purpose.

- Showing random content generated server-side: the first randomly generated version is captured by the cache and served to everyone else.

- Using anonymous sessions for any functionality: either the feature breaks, or, if requests carrying an anonymous session bypass the reverse proxy, your scalability is gone.
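The last two pitfalls can be illustrated with a toy cache. This is a hypothetical, heavily simplified model and not how CloudFront is implemented: one entry per path, no expiry, and requests carrying a session cookie go straight to the origin. The cookie prefixes assume Drupal's usual `SESS`/`SSESS` session cookie naming. Randomness gets frozen for everyone, while session traffic scales linearly on the origin.

```python
import random
from typing import Callable, Dict, Optional, Tuple


class ToyReverseProxy:
    """Hypothetical, heavily simplified shared cache: one entry per path, no expiry."""

    def __init__(self, origin: Callable[[str], str],
                 bypass_cookie_prefixes: Tuple[str, ...] = ("SESS", "SSESS")) -> None:
        self.origin = origin
        self.bypass_cookie_prefixes = bypass_cookie_prefixes
        self.cache: Dict[str, str] = {}
        self.origin_requests = 0

    def get(self, path: str, cookies: Optional[Dict[str, str]] = None) -> str:
        cookies = cookies or {}
        # Requests carrying a session cookie are passed straight to the origin.
        if any(name.startswith(self.bypass_cookie_prefixes) for name in cookies):
            self.origin_requests += 1
            return self.origin(path)
        # Everyone else gets whatever the first request stored for this path.
        if path not in self.cache:
            self.origin_requests += 1
            self.cache[path] = self.origin(path)
        return self.cache[path]


def random_teaser_page(path: str) -> str:
    # Server-side "random" content: a different teaser on every render.
    return f"{path}: featured article #{random.randint(1, 1000)}"


proxy = ToyReverseProxy(random_teaser_page)
anonymous = {proxy.get("/") for _ in range(100)}
print(len(anonymous), proxy.origin_requests)  # 1 1: one random pick, frozen for everyone
for _ in range(50):
    proxy.get("/", cookies={"SESSabc123": "session-id"})
print(proxy.origin_requests)  # 51: every session request reaches the origin
```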

If you need to serve personalised content but still want to leverage caching, consider Drupal 8's auto-placeholdering system and the BigPipe module, which allow the "static" parts of your page to be cached effectively. The dynamic parts will still need a scalable backend, but in some use cases this is unavoidable.

Set up CloudFront

To configure CloudFront, refer to the official documentation. There is also a great post specifically about Drupal 8 settings which is still valid, so it would not make much sense to repeat it here.

Our addition was a change to the Default (*) behavior, made after a Google AdWords campaign spammed the invalidation queue with URLs carrying gclid or _ga parameters. Unfortunately CloudFront doesn't allow blacklisting or removing URL parts or parameters, only whitelisting them. So we ignore all URL parameters. We have clean URLs, so we don't need them, right?

Query String Forwarding and Caching: None (Improves Caching)
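With this setting CloudFront neither forwards the query string to the origin nor uses it in the cache key, so the campaign variants of a page collapse into a single cached object. Below is a rough model of the cache key within one distribution. It is a simplification: real cache keys can also include headers and cookies depending on the configuration. `cache_key` is a hypothetical helper.

```python
from urllib.parse import urlsplit


def cache_key(url: str, forward_query_string: bool) -> str:
    """Rough model of the per-distribution cache key: path, plus the query string if forwarded."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if forward_query_string and parts.query:
        return f"{path}?{parts.query}"
    return path


campaign_urls = [
    "https://www.example.com/about",
    "https://www.example.com/about?gclid=abc123",
    "https://www.example.com/about?_ga=2.1234.5678",
    "https://www.example.com/about?gclid=def456",
]
print(len({cache_key(u, forward_query_string=True) for u in campaign_urls}))   # 4 cached objects
print(len({cache_key(u, forward_query_string=False) for u in campaign_urls}))  # 1 cached object
```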

In order to keep our admin functions working, we had to create new "Behaviors" that whitelist URL parameter forwarding on certain paths, namely:

- `*/edit`

- `*/add/*`

- `*/editor*`, `/editor*`

- `*/entity-embed*`, `/entity-embed*`

- `/entity_reference_autocomplete/*`, `*/entity_reference_autocomplete/*`

- `/video-embed-wysiwyg/*`, `*/video-embed-wysiwyg/*`

- `*/admin/*`

- `/token/tree*`, `*/token/tree*`

- `sites/default/files/styles/*`, which was also needed because of the `itok` security parameter that Drupal adds to image style URLs.

For these behaviors we used the same settings we have in the `*/ajax/*` behavior.
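To reason about which behavior a request ends up in: CloudFront checks the cache behaviors in their precedence order, uses the first path pattern that matches the request path (the query string is not part of the match), and falls back to Default (*). In path patterns `*` matches zero or more characters and `?` exactly one, and matching is case-sensitive. Python's `fnmatchcase` follows the same rules for these two wildcards. It also treats `[...]` as a character class, which CloudFront does not, so this sketch assumes patterns without brackets. The sketch also assumes that a pattern without a leading `/` (like `sites/default/files/styles/*`) behaves as if it had one. `match_behavior` is a hypothetical helper.

```python
from fnmatch import fnmatchcase
from typing import Optional, Sequence


def _normalize(pattern: str) -> str:
    # Assumption: a pattern without a leading "/" behaves as if it had one.
    return pattern if pattern.startswith(("/", "*")) else "/" + pattern


def match_behavior(path: str, patterns: Sequence[str]) -> Optional[str]:
    """Return the first pattern (in precedence order) that matches path, or None for Default (*)."""
    for pattern in patterns:
        # fnmatchcase is case-sensitive; "*" spans any characters including "/", "?" is one character.
        if fnmatchcase(path, _normalize(pattern)):
            return pattern
    return None


behaviors = ["*/edit", "*/add/*", "*/admin/*", "sites/default/files/styles/*", "*/ajax/*"]
print(match_behavior("/node/12/edit", behaviors))  # */edit
print(match_behavior("/sites/default/files/styles/large/public/a.jpg", behaviors))  # sites/default/files/styles/*
print(match_behavior("/about-us", behaviors))  # None, i.e. the Default (*) behavior
```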

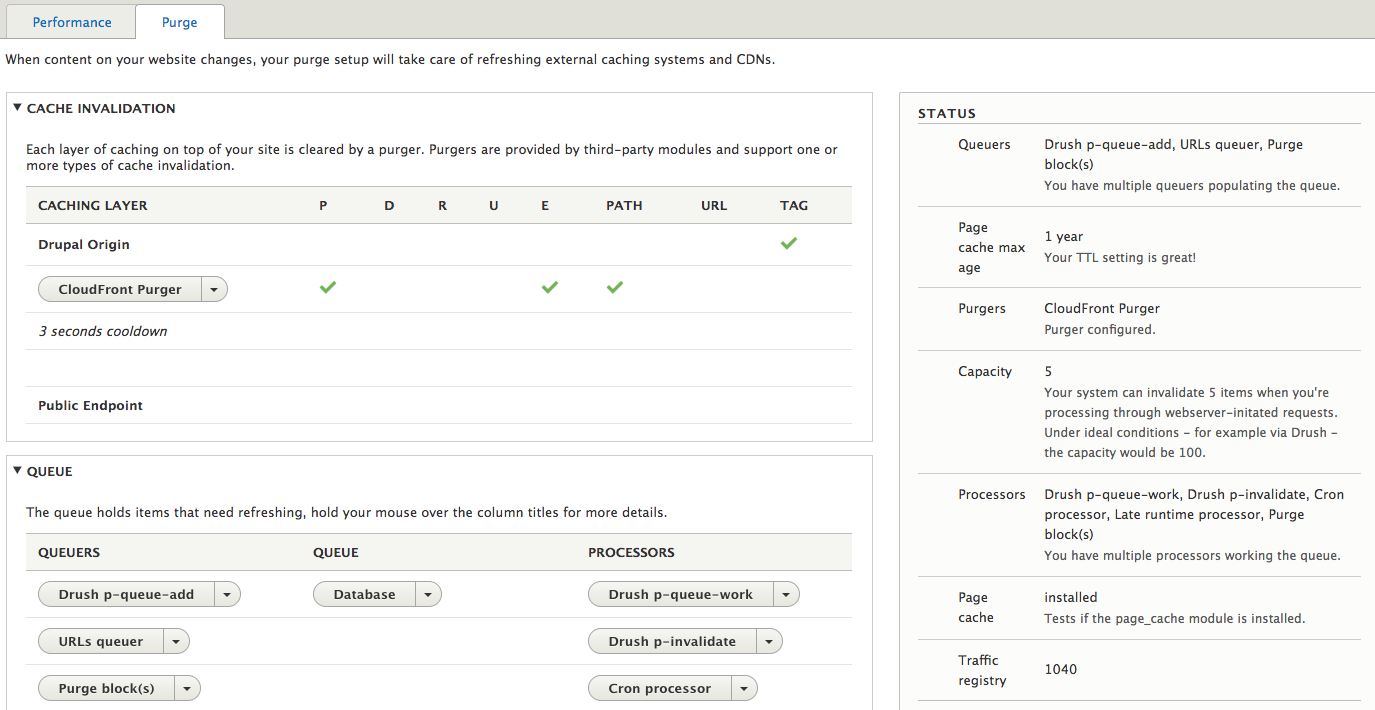

Set up the Purge and CloudFront Purger Drupal 8 modules

The Purge module facilitates cleaning external caching systems such as reverse proxies and CDNs.

It is a middleware module; separate modules are responsible for specific caches. It can handle multiple purgers, so it's easy to set up invalidation for multiple Varnish instances, but in our case, for CloudFront, we need the CloudFront Purger module. It only needs the CloudFront distribution ID, and I recommend putting it in settings.php instead of using the admin UI:

```php
$config['cloudfront_purger.settings']['distribution_id'] = 'YOURCLOUDFRONTDISTRIBUTIONID';
```

The Purge module manages an invalidation queue: multiple queuers can feed the queue and multiple purgers can consume it. The Drupal 8 caching system relies on cache tags, but unfortunately CloudFront does not support them (yet), so the Purge URL queuer is needed to break tag-based invalidations down into URLs.
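Conceptually, breaking tags down into URLs needs a registry that remembers which cache tags each cached page carried, so that a tag invalidation can be translated into the list of affected URLs. Here is a minimal sketch of that idea. `TagToUrlRegistry` and its methods are hypothetical and do not reproduce the URL queuer's actual code or storage. It also shows the fan-out: one edited node can turn into several URL invalidations.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set


class TagToUrlRegistry:
    """Hypothetical model of a URL queuer: remembers which cache tags each rendered URL carried."""

    def __init__(self) -> None:
        self.urls_by_tag: Dict[str, Set[str]] = defaultdict(set)

    def record_response(self, url: str, cache_tags: Iterable[str]) -> None:
        # Called when a cacheable page is served, with the tags Drupal attached to it.
        for tag in cache_tags:
            self.urls_by_tag[tag].add(url)

    def urls_for_invalidated_tags(self, tags: Iterable[str]) -> List[str]:
        # Every URL that carried any invalidated tag must be purged from the CDN.
        urls: Set[str] = set()
        for tag in tags:
            urls |= self.urls_by_tag.get(tag, set())
        return sorted(urls)


registry = TagToUrlRegistry()
registry.record_response("/node/1", ["node:1", "config:system.site"])
registry.record_response("/", ["node:1", "node:2", "node_list"])
registry.record_response("/blog", ["node:1", "node_list"])
print(registry.urls_for_invalidated_tags(["node:1"]))  # ['/', '/blog', '/node/1']
```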

A lesson learned: there is only a single invalidation queue, so tag- and URL-based invalidations are stored in the same place. When I left the core tags queuer enabled without any tag-based purger to consume its items, even the URL-based invalidations stopped working. After debugging, it turned out that the Purge module claims the first X items of the queue (100 per drush pqw run) and distributes them among the purgers. When all 100 of those items were tag-based, the URL-based CloudFront purger never received a single URL to invalidate again.
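The starvation can be reproduced with a simplified model of the queue. This is not the Purge module's code. It assumes that items no purger can handle are released back to the head of the queue, and `QueueItem` and `queue_work` are hypothetical names. With 100 items claimed per run and only a URL purger available, tag items at the head block the URL items behind them indefinitely.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class QueueItem:
    kind: str        # "tag" or "url"
    expression: str  # e.g. "node:1" or "/node/1"


def queue_work(queue: List[QueueItem], batch_size: int, supported_kinds: Set[str]) -> List[str]:
    """One simplified 'drush pqw' run: claim the head of the queue, purge what a
    purger supports, and release the rest back to the head of the queue."""
    claimed = queue[:batch_size]
    purged = [item.expression for item in claimed if item.kind in supported_kinds]
    unprocessed = [item for item in claimed if item.kind not in supported_kinds]
    queue[:batch_size] = unprocessed  # assumption: unprocessed items keep their position
    return purged


# Core tags queuer left enabled: 150 tag items ahead of 10 URL items, but only a URL purger.
queue = [QueueItem("tag", f"node:{i}") for i in range(150)]
queue += [QueueItem("url", f"/node/{i}") for i in range(10)]
for _ in range(5):
    print(len(queue_work(queue, 100, {"url"})), len(queue))  # 0 160 on every run
```

In this model, the URL items only get through once the head of the queue stops filling up with items nobody can process, for example with the tags queuer disabled or a tag-capable purger added.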

Check invalidation in Drupal

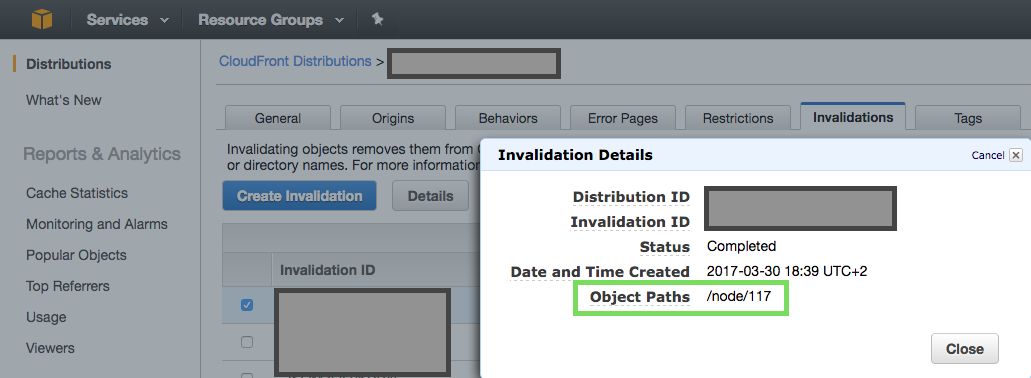

Check invalidation in CloudFront

According to Amazon's documentation, it usually takes 10 to 15 minutes for CloudFront to complete an invalidation request, depending on the number of invalidation paths included in the request.

10-15 minutes is a painful disadvantage. In comparison, the open-source Varnish reverse proxy can invalidate objects immediately, and competitors such as Fastly and Cloudflare also offer fast, cache-tag-based invalidation.
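Besides the AWS console, the status can also be checked from a script. Here is a minimal sketch that polls the boto3 CloudFront client's `get_invalidation` call, whose response carries a `Status` of `InProgress` or `Completed`. The client is passed in so the loop can be tried without AWS. The polling interval and limit are arbitrary assumptions, and the IDs in the usage comment are placeholders. boto3 also ships a ready-made `invalidation_completed` waiter that serves the same purpose.

```python
import time
from typing import Any, Callable


def wait_for_invalidation(client: Any, distribution_id: str, invalidation_id: str,
                          poll_seconds: float = 30.0, max_polls: int = 60,
                          sleep: Callable[[float], None] = time.sleep) -> int:
    """Poll GetInvalidation until the status is "Completed"; return the number of polls used."""
    for attempt in range(1, max_polls + 1):
        response = client.get_invalidation(DistributionId=distribution_id, Id=invalidation_id)
        if response["Invalidation"]["Status"] == "Completed":
            return attempt
        sleep(poll_seconds)  # typically "InProgress" for several minutes
    raise TimeoutError(f"invalidation {invalidation_id} not completed after {max_polls} polls")


# Usage with boto3 (requires AWS credentials; the IDs are placeholders):
#   import boto3
#   client = boto3.client("cloudfront")
#   wait_for_invalidation(client, "YOURCLOUDFRONTDISTRIBUTIONID", "YOURINVALIDATIONID")
```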

With the Late runtime processor (a submodule of Purge), Drupal sends recently modified URLs to CloudFront immediately. If there are many URLs to invalidate, a cron job running drush pqw every minute will quickly free up the queue, but content updates only become visible to end users after CloudFront finishes the invalidation jobs.
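The CloudFront Purger module builds these requests for you. To show what a URL-based invalidation request looks like, here is a sketch that assembles the `InvalidationBatch` structure accepted by CloudFront's CreateInvalidation API, as exposed by boto3's `create_invalidation`. The de-duplication and the query-string stripping (which matches a Default (*) behavior that ignores query strings) are assumptions of this sketch, not necessarily what the module does. `build_invalidation_batch` is a hypothetical helper.

```python
import uuid
from typing import Dict, Iterable, List
from urllib.parse import urlsplit


def build_invalidation_batch(urls: Iterable[str]) -> Dict:
    """Turn absolute or relative URLs into a CloudFront InvalidationBatch structure."""
    paths: List[str] = []
    for url in urls:
        # Keep only the path (assumption: the cache behavior ignores query strings).
        path = urlsplit(url).path or "/"
        if not path.startswith("/"):
            path = "/" + path  # invalidation paths start with "/"
        if path not in paths:  # de-duplicate while keeping order
            paths.append(path)
    return {
        "Paths": {"Quantity": len(paths), "Items": paths},
        # CallerReference must be unique per request; a random UUID is one simple choice.
        "CallerReference": f"drupal-{uuid.uuid4()}",
    }


batch = build_invalidation_batch([
    "https://www.example.com/node/1",
    "https://www.example.com/node/1?gclid=abc123",
    "https://www.example.com/",
])
print(batch["Paths"])  # {'Quantity': 2, 'Items': ['/node/1', '/']}
# Sending it with boto3 (requires AWS credentials):
#   boto3.client("cloudfront").create_invalidation(
#       DistributionId="YOURCLOUDFRONTDISTRIBUTIONID", InvalidationBatch=batch)
```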

Command line tools and final checklist

The Purge module provides some handy drush commands.

We run drush pqw every minute to make sure the queue is emptied quickly. Each run processes 100 URLs.
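Here is a back-of-the-envelope estimate of how long a backlog takes to clear at 100 URLs per run and one run per minute, plus CloudFront's usual 10-15 minutes before the last change becomes visible. The 2,350-item backlog is purely illustrative, and the helper functions are hypothetical.

```python
import math
from typing import Tuple


def minutes_to_drain(queued_items: int, items_per_run: int = 100, runs_per_minute: int = 1) -> int:
    """Minutes of 'drush pqw' runs needed to empty the queue."""
    if queued_items <= 0:
        return 0
    return math.ceil(queued_items / (items_per_run * runs_per_minute))


def minutes_until_visible(queued_items: int, invalidation_minutes: Tuple[int, int] = (10, 15)) -> Tuple[int, int]:
    """Rough window until the last queued change is visible: drain time plus CloudFront's invalidation time."""
    drain = minutes_to_drain(queued_items)
    return drain + invalidation_minutes[0], drain + invalidation_minutes[1]


print(minutes_to_drain(2350))       # 24
print(minutes_until_visible(2350))  # (34, 39)
```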

To quickly check the status of your queue there is the drush pqs command, but for us its "The total number of items currently in the queue" figure is wrong: it never decreases. So we check the database queue directly with `drush sqlq "SELECT COUNT(*) FROM purge_queue"`.

- If the queue is empty, that's good.

- If there are items in the queue but their number decreases after running drush pqw, that's good.

- When you check watchdog for errors and find only "Successfully invalidated" messages, you are almost there.

- Then, when the same invalidations show up as completed on the Amazon side, you can check your live site for the latest changes.

Verdict

CloudFront is not cheap, and it's not easy to calculate its fee. Moreover, invalidation requests are also billed once you exceed a free monthly allowance. The lack of cache-tag support makes this even more painful, because the only working setup currently available generates far more URL-based invalidation requests than cache tags would require. Then there is the typical 10-15 minute invalidation time.
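To make the invalidation part of the bill concrete: according to AWS's published pricing, the first 1,000 invalidation paths per month are free and each further path costs USD 0.005, with a wildcard path counting as a single path. Check the current pricing page before relying on these figures. The sketch below estimates the monthly charge. The edit count and the 40-URLs-per-edit fan-out are made-up, illustrative numbers.

```python
from decimal import Decimal

# Assumption: AWS's published invalidation pricing; verify against the current pricing page.
FREE_PATHS_PER_MONTH = 1000
PRICE_PER_PATH_USD = Decimal("0.005")


def monthly_invalidation_cost(paths_requested: int) -> Decimal:
    """Invalidation charge for one month: paths beyond the free allowance are billed per path."""
    billable = max(0, paths_requested - FREE_PATHS_PER_MONTH)
    return billable * PRICE_PER_PATH_USD


# Illustrative numbers: 200 content edits a month, each fanning out to 40 URLs via cache tags.
paths = 200 * 40
print(paths, monthly_invalidation_cost(paths))  # 8000 35.000
```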

On the other hand, CloudFront is a massive, highly scalable and reliable infrastructure from the largest global cloud provider. Hopefully they will introduce cache-tag support soon.